Software Defined Traffic Engineering for Improving Quality of Service

Xiaoming Li, Jinyao Yan*, Hui Ren Key Laboratory of Acoustic Visual Technology and Intelligent Control System (Ministry of Culture) and Beijing Key Laboratory of Modern Entertainment Technology, Communication University of China, Beijing, 00024, China

2 Key Lab of Media, Audio and Video, Communication University of China, Beijing, 100024, China

* The corresponding author, email: jyan@cuc.edu.cn

I. INTRODUCTION

The Visual Networking Index report from Cisco Systems predicts that Internet video will account for 82 percent of Internet traffic worldwide in 2020[1]. Global IP traffic will increase roughly threefold over the next five years. With the dramatically increasing amounts of network traffic, traffic congestion happens often and the performance degrades in the network. For a long time in the past, the telecom operators increased the capacity of the network by improving the network hardware using high-speed switches and routers.However, this over-provisioning solution had a low and unbalanced utilization and can not solve the congestion of hot spots effectively.Congestion may appear in some links of network while the rest of the network might be light loaded or idle. Traffic engineering (TE)analyzes and predicts the traffic, manages the route of the traffic request to improve the network utilization and to optimize the overall performance of data network [2]. In the meantime, network congestions are eliminated[3][4] which is critical to multimedia services such as Netflix [5].

Multi-Protocol Label Switching (MPLS)[6], as a solution of traffic engineering, explicitly labels routing between source and destination which can control the traffic distribution.However, labels in MPLS TE are configured statically without real time reconfiguration ability and adaptability. Software Defined Networking (SDN) has become a promising solution to enhance the flexibility and adaptability of traffic engineering[7][8]. Network traffic can be flexibly scheduled by making use of the centralized network view and dynamic configuration capabilities of SDN. The global view includes the state of network, the utilization of the network and amount of current traffic which is maintained by the SDN controller. SDN based TE controls and manages the traffic in the network in real time, and obtains better QoS performance such as delay,throughput and bandwidth utilization.

In this paper, we study congestion-aware dynamic traffic engineering based on SDN and proposed a traffic scheduling algorithm called CATS to eliminate network congestion and to improve QoS. The main contributions of this paper are as follows:

1) We propose the definition of aggregated elephant flow sharing congested links and its detection algorithm for traffic engineering.

2) We propose an optimization model for traffic scheduling with a goal to minimize the variance of link utilization and QoS.

3) By using the chaos genetic algorithm to solve the optimization problem, we propose SDN-based congestion-aware traffic scheduling algorithm (CATS).

Simulations using video traffic traces show that our proposed traffic scheduling algorithm is able to effectively detect and eliminate network congestions in subsecond. In consequence, it improves the QoS for applications with lower packet loss rate and balanced link utilization.

The rest of this paper is organized as follows. Section II reviews related work of SDN based traffic engineering and QoS for multimedia services. Section III describe SDN-based congestion-aware traffic engineering including aggregated elephant flow detection algorithm, network model and optimization problem, and traffic scheduling algorithm. Experiments results are described and analyzed in section IV. Section V concludes the paper.

II. RELATED WORK

Traffic engineering has been an important mechanism and a research topic to improve the performance in Internet for decades. QoS routing algorithms are kinds of mechanisms of IP based traffic engineering to find optimal path in terms of network performance such as delay and bandwidth. OpenQoS [9] classifies the traffic into data and multimedia, and the routes of multimedia flows are optimized with a QoS routing algorithm. HiQoS [10] makes use of multiple paths between source and destination and queuing mechanisms to optimize QoS. Equal-Cost-Multi-path routing (ECMP)[11][12] is a solution to find multiple optimal routes which is considered more efficient than single path QoS routing. The main feature of ECMP is to equally split and forward flows to multiple paths for load balancing. The traffic load will be more balanced when the ECMP traffic scheduling is made in packet level instead of flow level. However, the bandwidth,latency, and reliability of each path may be not same in the actual network. WCMP (weighted Equal-Cost-Multi-path) routing [13] was proposed to split traffic in proportion to the capacity of paths, which is a special case of ECMP. This method is more complicated in the traditional switch with a high cost.

In the SWAN(software-driven wide area network)[14] architecture, SWAN enables software driven inter-DC WANs to carry significantly more traffic. SWAN leverages a small amount of scratch capacity on links to apply updates in order to ensure that there is no transient congestion. SWAN improves bandwidth efficiency while providing preference for higher-priority services and fairness among similar services. In the Cisco CON-GA[15] architecture, TCP streams are divided into multiple flow lets and flow lets are allocated to multiple paths. This enables CONGA to efficiently balance the load for data centers.HULA[16]} addresses the scalability issue of CONGA with P4-based switches maintaining congestion state for the best next hop per destination instead of all paths to a destination.

Micro Te [17] minimizes the maximum link utilization with some modifications to hosts in the data center. Hosts in Micro Te collect and report their traffic matrix to the SDN controller for optimization. Traffic Engineering in an incrementally deployed SDN environment is proposed in [18] where traditional networks are mixed with a small number of SDN enabled devices. In segment Routing, the routing path is broken up into segments. With the help of SDN, segment routing controls the traffic and online route selection in order to enable better network utilization and QoS [19][20]. In Hedera [21], a flow with flow rate more than 10% of the link capacity is considered as large flow. The SDN controller sends a traffic statistics request to edge switches in a certain period to detect the large flow. Whenever a large flow is detected then it is rescheduled to light loaded links that meet their bandwidth demand. By default, Hedera uses ECMP for flow forwarding. Different from Hedera, Mahout finds the large flow by detecting TCP buffer of end nodes[22]. In Hedera, flow is defined with the same 10-tuple of

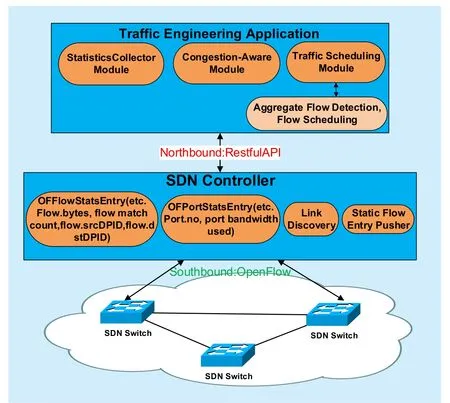

Fig. 1 The SDN-based traffic engineering

III. SDN BASED TRAFFIC ENGINEERING

As traditional traffic engineering could not adjust traffic dynamically, we study real-time and optimal traffic engineering based on SDN. The centralized controller in SDN first detects the aggregated flow traffic when link utilization is high and congestions happen, then computes the flow allocation on optimal routes and the forwarding rules for the traffic scheduling,finally distribute the flow rules to SDN switches. Compared to traditional traffic engineering,the main advantage of SDN based traffic engineering is: traffic are detected and rescheduled from global view rather than local view of the traffic and the utilization of links. Moreover,due to the flexibility of SDN, SDN based traffic engineering can detect and eliminate the congestion in real-time. As shown in figure 1,SDN-based traffic engineering includes three layers and interaction between them.

In this section, we first define the aggregated elephant flow and design the detection algorithm for aggregated elephant flows. Then we design the congestion-aware dynamic traffic scheduling including the network model,the optimization problem for balancing the link utilization and the overall QoS, and a traffic scheduling algorithm to solve the optimiza-tion problem.

3.1 Aggregated elephant flow detection

Traditional flow and traffic engineering are based on IP. SDN/OpenFlow [23] defines flows with various granularity such as source/destination switches, VLAN and application types. In Hedera, each elephant flow is a single large flow over a certain size (10% of the link) and duration, identified with the same 10-tuple of

1) flows share the congested link and share part of their path;

2) flows are video flows or big enough flows(for example: each flow is bigger than 1%of the congested link).

For example, we may bundle flows of video or voip traffic from/to one campus network as an aggregated elephant flow. Then we may want to provide QoS for the aggregated flow to upgrade the video and voip service for the campus costumer, or when we may want to retour those traffic to avoid the congestion link they sharing.

In this paper, we label the aggregated elephant flow with the source SDN/OpenFlow switch DPID or destination SDN/OpenFlow switch DPID of video flows for the simplicity reason. We assume that SDN/OpenFlow enabled switches and routers might coexist with traditional ones in the network. SDN controller communications with and controls only SDN/OpenFlow enabled switches. As long as Src_DPID or Dst_DPID is the same, they are in the same aggregated elephant flow, even their source IP and destination IP are not the same.

We make use of the passive detection of congestion in SDN/OpenFlow network. The SDN controller defines the congestion level for OpenFlow switches. For example, when the link utilization is higher than a threshold of 90%, then switches will send a congestion notification via the Packet_in message to the SDN controller [24]. The controller receives the message, and the message is parsed to obtain the following information:

1) The location of congestion by “switch ID”(data path identification or DPID);

2) Big flows on the congested link by “byte counters”.

The SDN controller calculates the counter of the flow meter of the congested switch to find flows that occupies more than certain amount of link utilization or video flows. In the following, we summarize our algorithm to detect the aggregated elephant flow for traffic engineering.

Algorithm 1: Aggregated elephant flow detection algorithm

Input: The flow Table entry set(OFFlow-StatsEntry) of the congested switch which is collected through the Statistics Collector module of the SDN controller.

Output: An aggregated elephant flow:ret=1 when a single flow as an elephant flow;ret=2 when aggregated flows as an aggregated elephant flow. The flow set flow set F in the aggregated elephant flow.

Algorithm 1 Aggregated elephant flow detection algorithm

Whenever congestion is detected, the switch sends a congestion notification to the controller via the Packet_in messages in the Open Flow protocol. As described above in the aggregated elephant flow detection algorithm,it is necessary to sort firstly all flows in the flow set of the switch flow table. Flows are sorted in terms of flow size or importance.Only important video flows may be considered for simplicity or in case of limit network resource. Then the algorithm detects a single flow or flows as an aggregated elephant flow when their flow.bytes ≥ THRESHOLD.

3.2 Traffic scheduling

As described above, flows in the aggregated elephant flow are detected by the aggregated elephant flow detection algorithm. In this subsection, we first find the set of optimal candidate path for the flow allocation. Then,we propose an optimization model for flow scheduling to optimize the link utilization and overall QoS. To solve the optimization problem, we use the chaos genetic algorithm to calculate the optimal distribution matrix for the aggregated elephant flow.

1) Network model: V is a set of all switch nodes, and E is a set of links between switches. The network is represented by a direct-ed-graph GVE=(,). The capacity Cuv(,) of a link from node u to v is defined as the physical bandwidth of the link. The flow request vector FRV is a set of flows to be allocated.Then the congestion-aware traffic scheduling is to find the optimal flow allocation matrix FAM for flows in FRV. The main notations are described in table 1.

Table I Notations in the model

Definition 1: Flow request vector FRV

Flow request vector FRV represents the set of flows to be allocated which includes flows in flow set F in aggregated elephant flow and incoming flows in traffic prediction. For traffic scheduling of the aggregated elephant flow in this paper, flow request vector FRV is the same as flow set F. Let N denotes the size of FRV.

Definition 2: Flow allocation matrix FAM

FAM is the flow allocation matrix for flow request vector FRV. Then FAM is a matrix of MN×, where M represents the number of candidate paths.

Definition 3: Optimal residual network Gr

The maximum feasible capacity of the optimal residual network indicates that the maxi-mum capacity in the residual network. We can solve the maximum fl ow problem using Ford Fulkerson algorithm [26]. In this model, the top-M optimal candidate path set CPS (candidate path set) is chosen as augmenting paths to get the maximum flow maxC(Gr). By definition, it satisfies the max fl ow/min cut theory.

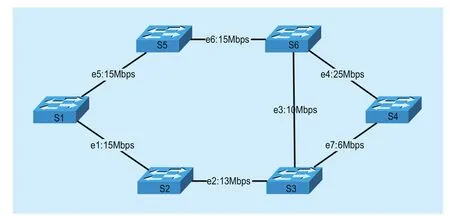

Condition 1 guarantees that the allocated flow rate of the link is less than the available bandwidth of the link. By definition,we can see that the maximum feasible flow is not necessarily the maximum admit table aggregate flow. As shown in figure 2,the optimal residual network is composed of the candidate optimal paths. The source is S1, destination is S4, there are 4 routes path path1={e1,e2,e3,e4}, path2={e5,e6,e4},path3={e1,e2,e7}, path4={e5,e6,e3,e7}.

According to the Ford Fulkerson algorithm can find the maximum feasible flow:28Mbps, assume fl ow request vector FRV is:[11,9,8,5]T, its maximum admit table aggregated flows: [11,8,5]24=Mbps.

As CPS is the optimal path set, there is no other path in the network with more available bandwidth than path in CPS. Therefore, the flows are not admit able to the current network.

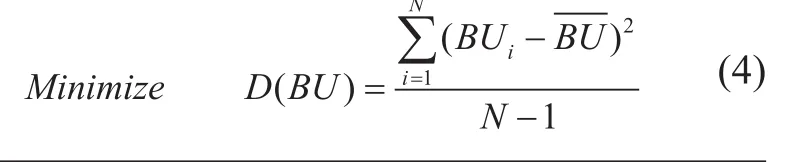

2) Optimization problem for flow scheduling: Existing objectives for traffic optimization are minimizing the maximum link utilization [27] and minimizing link cost [28].The latter may lead to uneven distribution of traffic in the network and traffic congestion in the low cost path. The objective in our optimization problem is close to the first one.However, we consider that the variance of link utilization ratio is an important index of the distribution of link bandwidth utilization as opposed to the average link utilization and the maximum link utilization which are not suffi-cient to show the balance of the link utilization.The smaller variance of link utilization, the closer utilization among links. Then, the overall utilization of the links is more balanced.The higher link utilization, the higher packet loss rate of the link. Given the certain amount of traffic, the balanced utilization of congestion links produces lowest overall packet loss rate. As a result, the overall performance and QoS of network are improved. Assumerepresents the bandwidth utilization of the link euv. The sample spaceSample variance isTherefore, the objective of flow reallocation is to minimize DBU().

Fig. 2 Optimal residual network composed of candidate optimal paths

We form the optimization problem as follows:

subject to∑i=1 M ij≤≤≤1,1 (Constr. 1)∑i=1 a jN M c maxC FRV c FAM FRV i1=accept i1( ),∀ ∈ ×(Constr. 2)∑flowuv(,) 0= (Constr. 3)vV∈

Constraint 1 guarantees that each flow can only choose one path; Constraint 2 guarantees that the flow allocation matrix can satisfy the maximum acceptable capacity of the existing candidate paths; Constraint 3 guarantees the flow conservation in the residual network. The net flow entering the node u is 0 except that u is the source or the terminal of flows;

As the optimization problem (4) is a 0-1 integrated linear programming problem, it is an NP-Hard problem [29]. Please note that if, then it indicates that the optimal candidate path can not meet all request of flows in the flow set.

Algorithm 2: Genetic algorithm for flow scheduling

3) Chaos genetic algorithm to solve the optimization problem: Because the mathematical model (4) is a combinatorial optimization problem of NP hard (in particular, 0-1 integrated linear programming problem). The genetic algorithm is more suitable for combinatorial optimization problem [30], and the crossover and mutation operators have typical combination features. In order to avoid the local optimal solution, we use the genetic algorithm based on chaotic map [31]. The parameters for the algorithm are set as follows:

Population number: pop size, each population represents a distribution matrix;

Genetic times: Genetic Num;

In this algorithm, the logistic function is used as the initial value of the chaotic map withwhere µ∈[0,4] is the parameter of logistic function. Our studies show that if x∈[0,1] , logistic mapping is in a chaotic state. And the iteration is consistent with a state of pseudo random distribution when µ is close to 4.

4) SDN-based congestion-aware traffic scheduling algorithm (CATS): First SDN controller collects the congestion switch OFF low Stats Entry by Open Flow module Statistics Collector. By aggregated elephant flow detection algorithm, when the return ret is 2, it triggers aggregated traffic scheduling algorithm which returns an aggregate flow allocation matrix. SDN controller generates the flow Table according to the matrix, and downloads flow Table to switches of candidate path.The pseudo-code description of the algorithm is as follows:

Gene crossover probability: 0.6;

Mutation probability: 0.1;

When the population is initialized, the logistic function is used as a chaotic map

IV. EXPERIMENTAL RESULTS AND ANALYSIS

As mentioned above, we use the chaos genetic algorithm to solve the flow scheduling problem when congestion detected, and flows are redistributed by the SDN controller. We conduct two sets of experiments. The first groups of experiments are video streaming in a wide area backbone network such as video for a live event. The second experiments are video file transferring among data centers of CDN.We use the virtual switch OVS, and fl oodlight v1.2 as controller which supports Open Flow 1.3. We use video traces [32] to generate live UDP video streams in the first experiments and transfer video files over TCP in the second experiments.

4.1 Experiments of video streaming in a wide area backbone network

The experimental topology of live video streaming is illustrated in figure 4. The OVS switches are S1, S2 … S7. The capacity of each link is 100Mbps. Video flows are steaming from server h8 to clients h1, h2 and h3 and streaming from server h9 to clients h4,h5, h6 and h7 respectively. By default, Dijkstra’s algorithm is used in the SDN controller for computing the shortest path. The shortest path from switch S1 to switch S4 is (8,9)ee.We use the 4K video trace of “Tears of Steel”for streaming. The video trace is obtained from the video trace website (trace.eas.asu.edu). The arrival interval of video flows follows Poisson distribution with λ=2. We use D-ITG [33] to generate the background flow traffic with a Pareto distribution on/off model,where the shape and scale parameters are set to 1.5 and 200 respectively.

Algorithm 3: SDN-based congestion-aware traffic scheduling algorithm (CATS)

Fig. 3 Network topology generated in Mini net

At the time of 14th second in our experiment, congestion occurs and is detected on link e9. The link e9 notifies the floodlight controller with Packet in_ message, then triggers our SDN-based congestion-aware traffic scheduling algorithm CATS. At time of congestion, the flow rates from server h8 to hh1,2 and h3 are 6.73Mbps, 6.82Mbps and 0.988Mbps respectively, and flow rates from server h9 to hhh4,5,6 and h7 are 6.8Mbps,8.68Mbps, 6.73Mbps and 0.165Mbps respectively. Table 2 shows the physical bandwidth and residual bandwidth for each edge. The remaining bandwidth of edge e9 is 43.6Mbps,namely background traffic is 56.4Mbps.However total flow rate allocated by OSPF is 36.913Mbps (where 6.73 + 0.165 + 0.988+6.82 + 6.8 + 8.68 + 6.73 =36.913). As a result, the utilization rate of link e9 reaches up to 93.3% ((36.913+56.4)/100). About 36.913%of the physical bandwidth is occupied by flows going from S1 to S4. Then, flows to h1,h2,h3,h4,h5,h6,h7 are aggregated as an ag-gregated elephant fl ow.

Table II Residual bandwidth in the backbone network

Fig. 4 Optimal residual network

We select the top 4 candidate paths from S1 to S4 based on pre-k shortest paths algorithm as follows.

The optimal residual network composed of the candidate paths is shown in figure 4.

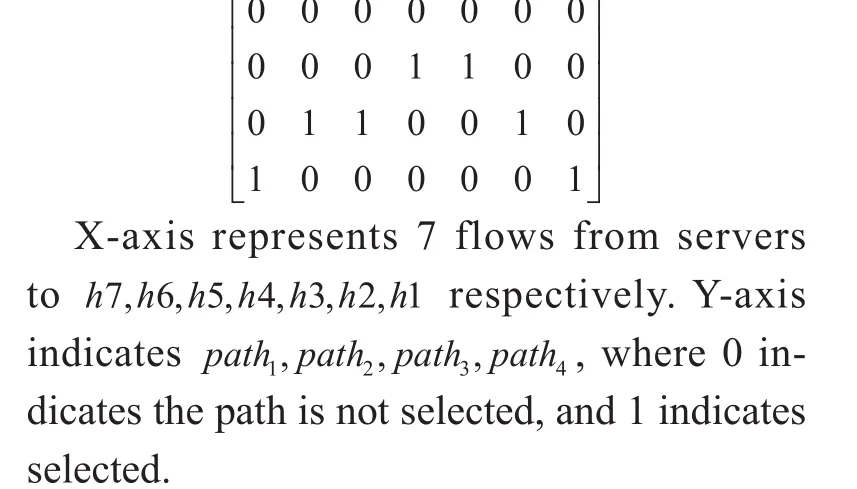

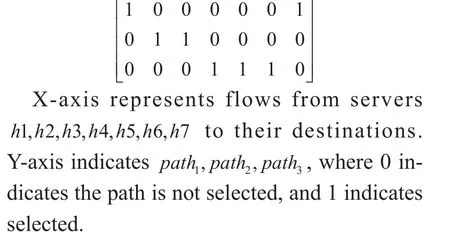

After the reallocation by our SDN-based congestion-aware traffic scheduling algorithm CATS, the flow allocation matrix FAM is as follows:

As ECMP is a commonly used method to balance the traffic in the data centers, we use ECMP with 4 candidate pathsfor comparison. We also compare the performance of the proposed algorithm with OSPF [34] which is based on Dijkstra’s algorithm. The experimental results of link utilization of all candidate paths are shown in figure 5. It can be seen from the figure, the variance of link utilization based on traditional OSPF is high, indicating that the link utilization is not balanced. Our algorithm is triggered at 14th second when congestion happened, and then it reduces the variance of link utilization. The variance of link utilization based on OSPF, ECMP and CATS right after 14th second is 0.032125, 0.008803 and 0.0036928 respectively. The link utilization improved with our proposed algorithm . There is no fully loaded link and no idle link with our algorithm. The overall variance of link utilization based on OSPF, ECMP and CATS is 0.040519,0.023269,0.022705 respectively.The overall variance of link utilization based on ECMP and our proposed algorithm are almost the same.

Figure 6 shows the link utilization of link e9 with OSPF, ECMP and CATS. It can be seen from the figure that based on the traditional OSPF algorithm, the utilization rate of the e9 at the 14th second link is as high as 93.3085%, and in most cases the link utilization is kept close to 100%, which will produce high packet loss for video streaming. In our proposed algorithm, the link utilization over 90% triggers CATS scheduling algorithm. As shown in the figure, the utilization of link e9 is significantly decreased after scheduling.From figure 5 we can see that ECMP can balance the traffic significantly better than OSPF because ECMP splits traffic based on flows on four paths while OSPF does not balance the traffic. However, the ECMP algorithm does not take into account the volume of flows and the real time state of the link. Therefor the link utilization reaches up to 100% sometimes.

From figure 7, we can see packet loss rate on link e9 with traditional OSPF algorithm,ECMP and CATS. Packet loss occurs most often with OSPF routing algorithm. This is because OSPF does not take into account the current link state and traffic load balance. Our proposed algorithm CATS performs best in terms of packet loss rate.

The convergence of the chaotic genetic algorithm that we proposed is shown in figure 8.The algorithm converges after 1.5362 seconds in the sixtieth generation with the utilization variance of 0.0036928, which is close to the optimal solution. Indeed, the scheduling result is good enough within a second and reduce the traffic congestion in real time. We compare the performance of chaotic genetic algorithm with simulated annealing algorithm to solve the same problem. In the simulated annealing algorithm, we set the initial temperature T0=1000, termination temperature Tend=e−3,chain length L=200 and cooling rate q=0.9.As shown in figure 8, the simulated annealing algorithm is close to the optimal solution after 10.558170 seconds in the sixty-fifth generation with the result of 0.0036928. Therefore, we propose the chaotic genetic algorithm to solve the optimization problem of traffic scheduling as the convergence time is critical for real time traffic scheduling.

Fig. 5 Variance of link utilization of candidate paths

Fig. 6 Bandwidth link utilization of link e9

4.2 Experiments of video file transferring among data centers

In this set of experiments, we setup our experimental topology in figure 9 the same as Google B4 topology [35] which provides connectivity among Google data centers worldwide.However we scale down the bandwidth and delay for simplicity in Mininet.

Fig. 7 Packet loss rate of link e9

Fig. 8 Convergence time

In our experiments, we use Iperf to send TCP flow to simulate video file transferring and data synchronization. Flows go from servers h1,h2,h3...h 7 to h8,h9 through switches in the network. Links are measuring the traffic for congestion. Table 3 shows the physical bandwidth and residual bandwidth for each edge of Google B4 topology. When link e2 is congested at 20th second in our experiments,it sends Packet_in messages to notify SDN controller, then will trigger the SDN-based congestion-aware traffic scheduling algorithm CATS. The flow rates of video file flows are FRV={1.93,1.68,1.44,1.31,0.762,0.989,1.32} in Mpbs at the time of 20th second. CATS algorithm computes the top 3 candidate paths from S6 to S10 based on k-shortest paths algorithm as follows: path1={e6,e7,e8}, path2={e1,e2},path3={e3,e4,e5}. Then, the optimal residual network composed of the best candidate paths is shown in figure 10.

After the reallocation by our SDN-based congestion-aware traffic scheduling algorithm CATS, the flow allocation matrix RAM is as follows:

Table III Residual bandwidth in the network among data centers

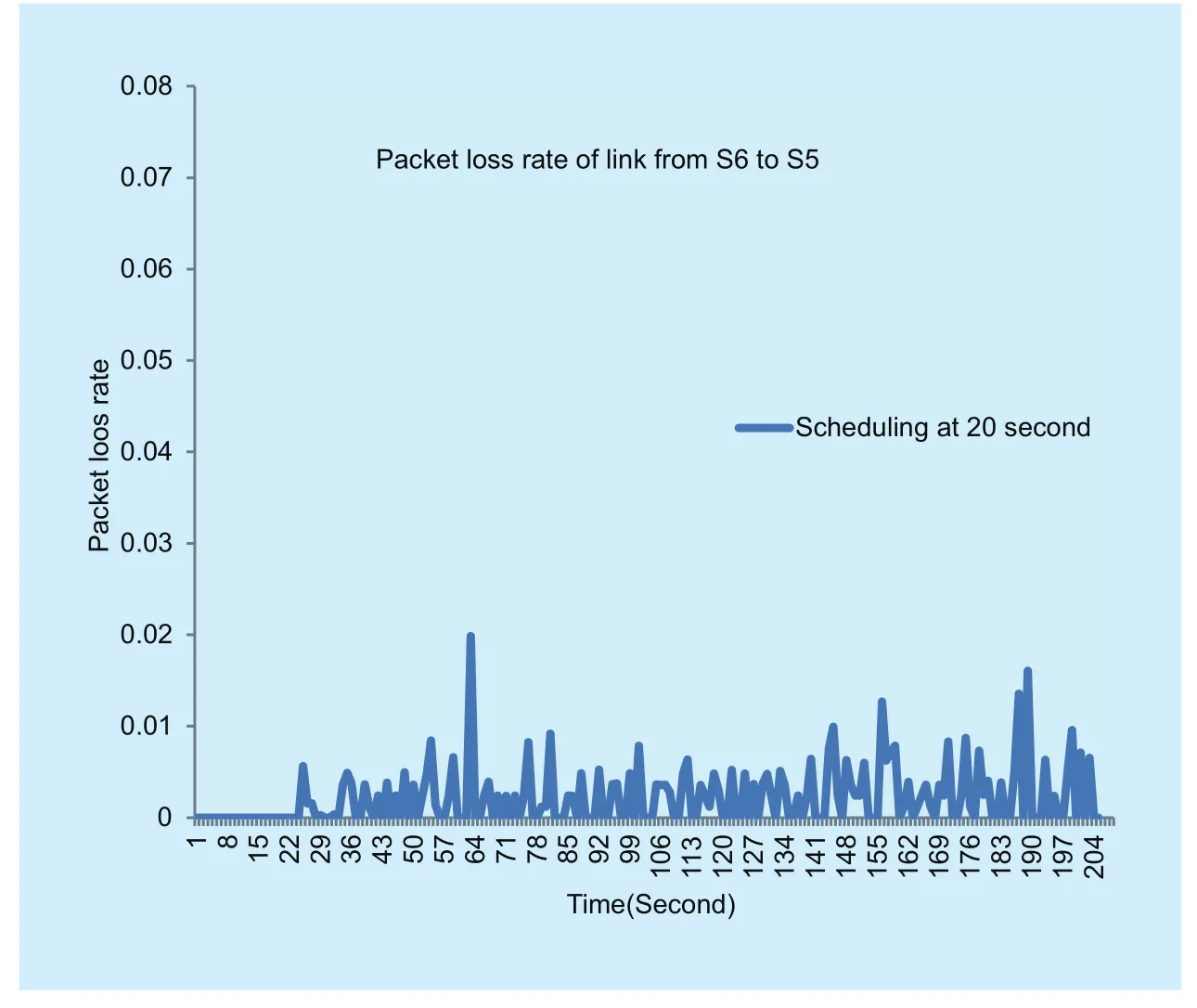

After the flow scheduling, the variance of link utilization is 0.0028988 and TCP packet loss rate is reduced as shown in figure 11.From figure 11, we can see that when the link e2 from S12 to S10 congested around at the 20th second, it triggers our proposed traffic scheduling algorithm. We find that the packet loss rate of the congestion link is obviously reduced. The link from S6 to S5 is not used before traffic scheduling in the beginning. When the traffic scheduling algorithm CATS is triggered at the 20th second, TCP flows from h1 ... h7 are reallocated to path1with the link from S6 to S5, then introduce packet loss on the link e6 from S6 to S5 as shown in figure 12.

As the aggressiveness of TCP congestion control algorithm, the packet loss rate will never go to zero at the bottleneck link. However we balance the link utilization with the increase of overall link utilization and the reduction of the overall congestion. Indeed, the overall file transmission time is also reduced.Therefore, the overall performance and QoS in the network is much improved with our proposed SDN traffic scheduling algorithm.

V. CONCLUSION

In this paper, we study the SDN based traffic engineering to improve the QoS for applications such as video streaming and video file transferring. We propose traffic scheduling using SDN to redistribute flows when congestion happens. We introduce first a new concept of aggregated elephant flow and a method to detect aggregate elephant fl ow for scheduling.Then we propose a new traffic optimization model and form into an optimization problem with the objective to minimize the variance of link utilization and to improve overall QoS.Since the optimization problem is an NP-hard problem, we propose the use of genetic algorithm based on chaos theory to solve the optimization problem.

Fig. 9 The simulation topology of Google B4

Fig. 10 Optimal residual network in video le transferring

Fig. 11 TCP packet loss rate of link from S12 to S10 before and after trac scheduling

Fig. 12 Packet loss rate of link from S6 to S5 before and after trac scheduling at the 20th second

ACKNOWLEDGEMENT

This work is partly supported by NSFC under grant No.61371191 and No.61472389.

[1] “Cisco visual networking index.,” 2016. http://www.cisco.com/c/en/us/solutions/service- provider/visual-networking-index-vni/index.html.

[2] N. Wang, K. Ho, G. Pavlou, and M. Howarth, “An overview of routing optimization for inter net traffic engineering,” IEEE Communications Surveys and Tutorials, vol. 10, no. 1, pp.36– 56, 2008.

[3] D. Awduche, A. Chiu, A. Elwalid, I. Widjaja, and X. Xiao, “Overview and principles of internet traffic engineering,” Heise Zeitschriften Verlag,vol. 121, no. 6, pp. 239–242, 2002.

[4] D. Awduche, J. Malcolm, J. Agogbua, M.O’Dell, and J. Mc- manus, “Requirements for traffic engineering over mpls,” Jour- nal of evidence-based social work, vol. 10, no. 2, pp.63–72, 1999.

[5] “Netfl ix.” https://www.netfl ix.com.

[6] D. O. Awduche and B. Jabbari, “Internet traffic engineering us- ing multi-protocol label switching (mpls),” Computer Networks, vol. 40, no. 1,pp. 111–129, 2002.

[7] M. Casado, M. J. Freedman, J. Pettit, J. Luo, N.Mckeown, and S. Shenker, Ethane: taking control of the enterprise. ACM, 2007.

[8] T. Dave, “Openfl ow: Enabling innovation in campus networks,” Acm Sigcomm Computer Communication Review, vol. 38, no. 2, pp. 69–74,2008.

[9] H. E. Egilmez and A. M. Tekalp, “Distributed qos architectures for multimedia streaming over software defined networks,” IEEE Transactions on Multimedia, vol. 16, no. 6, pp. 1597–1609,2014.

[10] J. Yan, H. Zhang, Q. Shuai, B. Liu, and X. Guo,“Hiqos:an sdn- based multi path qos solution,”China Communications, vol. 12, no. 5, pp. 123–133, 2015.

[11] C. Hopps, Analysis of an Equal-Cost Multi-Path Algorithm. RFC Editor, 2000.

[12] A. Dixit, P. Prakash, Y. C. Hu, and R. R.Kompella, “On the impact of packet spraying in data center networks,” in INFOCOM, 2013 Proceedings IEEE, pp. 2130–2138, 2013.

[13] J. Zhou, M. Tewari, M. Zhu, A. Kabbani, L.Poutievski, A. S- ingh, and A. Vahdat, “Wcmp:weighted cost multipathing for improved fairness in data centers,” in European Conference on Computer Systems, p. 5, 2014.

[14] C. Y. Hong, S. Kandula, R. Mahajan, M. Zhang, V.Gill, M. Nanduri, and R. Wattenhofer, “Achieving high utilization with software-driven wan,” ACM SIGCOMM Computer Com- munication Review,vol. 43, no. 4, pp. 15–26, 2013.

[15] M. Alizadeh, T. Edsall, S. Dharmapurikar, R.Vaidyanathan, K. Chu, A. Fingerhut, V. T. Lam, F.Matus, R. Pan, and N. Ya- dav, “Conga: distributed congestion-aware load balancing for datacenters,” Acm Sigcomm Computer Communication Review, vol. 44, no. 4, pp. 503–514, 2015.

[16] N. Katta, M. Hira, C. Kim, A. Sivaraman, and J.Rexford, “Hula:scalable load balancing using programmable data planes,” pp. 1–12, 2016.

[17] T. Benson, A. Anand, A. Akella, and M. Zhang,“Microte:fine grained traffic engineering for data centers,” in Conference on Emerging NETWORKING Experiments and Technologies, p- p.1–12, 2011.

[18] S. Agarwal, M. Kodialam, and T. V. Lakshman,“traffic en- gineering in software defined networks,” in INFOCOM, 2013 Proceedings IEEE, pp.2211–2219, 2013.

[19] R. Bhatia, H. Fang, M. Kodialam, and T. V. Lakshman, “Op- timized network traffic engineering using segment routing,” in Computer Communications, pp. 657–665, 2015.

[20] S. Bidkar, A. Gumaste, P. Ghodasara, and S. Hote,“Field trial of a software defined network (sdn)using carrier ethernet and segment routing in a tier-1 provider,” in Global Communications Conferece, pp. 2166–2172, 2014.

[21] M. Al-Fares, S. Radhakrishnan, B. Raghavan, N.Huang, and A. Vahdat, “Hedera: dynamic flow scheduling for data center networks,” in Usenix Symposium on Networked Systems Design and Implementation, NSDI 2010, April 28-30, 2010,San Jose, Ca, Usa, pp. 281–296, 2010.

[22] A. R. Curtis, W. Kim, and P. Yalagandula, “Mahout: Low- overhead datacenter traffic management using end-host-based elephant detection,” in INFOCOM, 2011 Proceedings IEEE, pp.1629–1637, 2011.

[23] “The openflow switch,” 2014. http://www.opennetworking.org.

[24] L. Long, F. Binzhang, and C. mingyu ., “Nimble:A fast flow scheduling strategy for open flow networks.,” Journal of Com- puter Science, vol.38, pp. 1056–1068, 2015.

[25] J. Y. Yen, “Finding the k shortest loopless paths in a network,” Management Science, vol. 17, no.11, pp. 712–716, 1971.

[26] J. Ford, L. R. and D. R. Fulkerson, “Maximal flow through a network,” Canadian Journal of Mathematics, vol. 8, no. 3, pp. 399–404, 1956.

[27] J. Kennington and A. Madhavan, “Optimization models and algorithms for minimizing the maximum link utilization in ospf data networks,”2007.

[28] R. M and P. P, “Handbook of optimization in telecommunica- tions,” Springer Science +Business Media., pp. 679–700, 2006.

[29] H. Williams, Logic and integer programming. International Se- ries in Operations Research and Management Science, Springer US, 2009.

[30] W. Lin., Intelligent optimization algorithm and its application. Tsinghua University Press, 2001.

[31] M. Javidi and R. Hosseinpourfard, “Chaos genetic algorithm instead genetic algorithm,”International Arab Journal of In- formation Technology, vol. 12, no. 2, pp. 163–168, 2015.

[32] P. Seeling and M. Reisslein, “Video traffic characteristics of modern encoding standards:H.264/avc with svc and mvc exten- sions and h.265/hevc,” The Scientific World Journal, vol.2014, p. 189481, 2014.

[33] M. E. Crovella and A. Bestavros, “Self-similarity in world wide web traffic: evidence and possible causes,” IEEE/ACM Trans- actions on Networking,vol. 5, no. 6, pp. 835–846, 1997.

[34] J. Moy, “Rfc 2328: Ospf version 2,” vol. 28, p. 1,1998. http- s://tools.ietf.org/html/rfc2328.

[35] S. Jain, A. Kumar, S. Mandal, J. Ong, L. Poutievski, A. Singh, S. Venkata, J. Wanderer, J. Zhou,and M. Zhu, “B4: experience with a globally-deployed software defined wan,” Acm Sigcomm Computer Communication Review, vol. 43, no. 4,pp. 3–14, 2013.

- China Communications的其它文章

- Migration to Software-Defined Networks: the Customers’ View

- Towards a Dynamic Controller Scheduling-Timing Problem in Software-Defined Networking

- Efficient Virtual Network Embedding Algorithm Based on Restrictive Selection and Optimization Theory Approach

- A Fast and Memory-Efficient Approach to NDN Name Lookup

- Energy-Efficient Joint Content Caching and Small Base Station Activation Mechanism Design in Heterogeneous Cellular Networks

- Dual Power Allocation Optimization Based on Stackelberg Game in Heterogeneous Network with Hybrid Energy Supplies