Interactive Photo-Browsing System Based on Moving Target Detection*

Xu Yong, Zhuo Jun-bao, Tian Xing, Bu She-hui

(School of Computer Science and Engineering, South China University of Technology, Guangzhou 510006, Guangdong, China)

0 Introduction

Techniques for tracking objects in video fall into three types: contrast analysis, movement detection and matching. The matching method and contrast analysis both work on single frames. They differ in that the matching method passes information between two adjacent frames, while contrast analysis does not. Movement detection, by contrast, requires multiple frames, and its accuracy depends on how well the background is constructed. Complex scenes, unpredictable factors and other interference (for example, significant changes in light intensity) make the background model very difficult to construct. To overcome these disadvantages, this paper adopts the Gaussian mixture model, makes some improvements to it, and uses it to build a photo-browsing system.

1 System Overview

The photo-browsing system is driven by recognizing the user's hand gestures. Realizing this function takes three steps.

Step 1 Moving objects are extracted from the background scene, using a Gaussian mixture model to construct the background model.

Step 2 A skin segmentation algorithm is employed to extract the hand object, and irrelevant objects are discarded.

Step 3 To locate the hand extracted in Step 2 accurately, the Canny edge detection algorithm is used to complete and smooth the edge of the hand object.

The details of the system are discussed in Sections 2, 3 and 4. The system was implemented and tested with Visual C++ and OpenCV. OpenCV is an open-source (see http://opensource.org) computer vision library available from http://SourceForge.net/projects/opencvlibrary[1].

2 Moving Target Extraction

2.1 Moving Target Detection Based on Gaussian Mixture Model

In complex scenes, the value of a pixel at a given location may change between adjacent frames. A more complex model, such as the Gaussian mixture model (GMM)[2], is therefore required to characterize the value of the pixel.

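Assume that a pixel is denoted by a d-dimensional vector x. A Gaussian probability density function (Gaussian PDF) can then be written as

$$\eta(\mathbf{x};\mathbf{m},\Sigma)=\frac{1}{(2\pi)^{d/2}|\Sigma|^{1/2}}\exp\left[-\frac{1}{2}(\mathbf{x}-\mathbf{m})^{T}\Sigma^{-1}(\mathbf{x}-\mathbf{m})\right]\qquad(1)$$

where m is the mean, Σ is the covariance matrix, and π is the ratio of a circle's circumference to its diameter.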

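The corresponding GMM for a pixel can be written as

$$p(\mathbf{x})=\sum_{i=1}^{K}w_i\,\eta(\mathbf{x};\mathbf{m}_i,\Sigma_i)\qquad(2)$$

where w_i (w_i > 0, ∑w_i = 1) is the weight of the i-th Gaussian PDF and K is the number of Gaussian PDFs (usually 3 ≤ K ≤ 5).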
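The following minimal C++ sketch shows how the Gaussian PDF and the per-pixel GMM described above can be evaluated for one pixel. It assumes isotropic covariances Σ_i = σ_i²I (the form used in [2]), so each covariance is represented by a single variance. The names `Component`, `gaussianPdf` and `mixtureDensity` are hypothetical; they do not come from the authors' code or from OpenCV.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One mixture component with isotropic covariance sigma2 * I (hypothetical type).
struct Component {
    double weight;             // w_i
    std::vector<double> mean;  // m_i, length d
    double sigma2;             // sigma_i^2
};

// Gaussian PDF with Sigma = sigma2 * I:
// eta(x) = (2 * pi * sigma2)^(-d/2) * exp(-|x - m|^2 / (2 * sigma2))
double gaussianPdf(const std::vector<double>& x, const std::vector<double>& m, double sigma2) {
    assert(x.size() == m.size() && sigma2 > 0.0);
    const double pi = std::acos(-1.0);
    double sq = 0.0;  // (x - m)^T (x - m)
    for (std::size_t k = 0; k < x.size(); ++k) {
        const double diff = x[k] - m[k];
        sq += diff * diff;
    }
    const double d = static_cast<double>(x.size());
    return std::pow(2.0 * pi * sigma2, -d / 2.0) * std::exp(-0.5 * sq / sigma2);
}

// Mixture density p(x) = sum_i w_i * eta(x; m_i, sigma_i^2 I).
// Unused (zero-weight) components are skipped.
double mixtureDensity(const std::vector<Component>& gmm, const std::vector<double>& x) {
    double p = 0.0;
    for (const Component& c : gmm) {
        if (c.weight > 0.0) p += c.weight * gaussianPdf(x, c.mean, c.sigma2);
    }
    return p;
}
```

For the Cb/Cr model of Section 2.2, x would have d = 2 elements.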

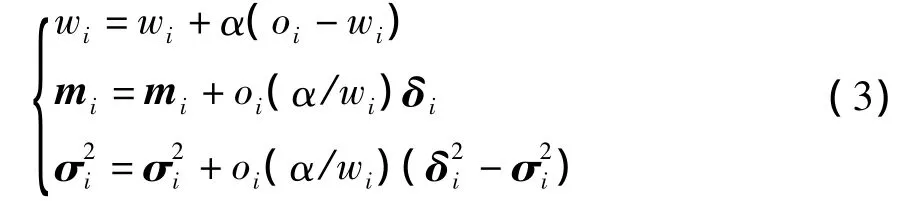

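Initially, for each pixel in the first captured frame, only the first Gaussian PDF receives meaningful values: its weight is set to 1 and its mean to the pixel value. The weights and means of the remaining Gaussian PDFs are set to 0, and the variances of all K Gaussian PDFs are set to a specified value σ0. Following [2], each covariance matrix is taken as Σ_i = σ_i²I. When a subsequent frame arrives, each pixel is matched against its GMM (that is, assigned to one of the K Gaussian PDFs), and the parameters of the Gaussian PDFs are iteratively updated as

$$\begin{aligned}w_i&\leftarrow w_i+\alpha\,(o_i-w_i)\\ \mathbf{m}_i&\leftarrow \mathbf{m}_i+o_i\,\frac{\alpha}{w_i}\,\boldsymbol{\delta}_i\\ \sigma_i^2&\leftarrow \sigma_i^2+o_i\,\frac{\alpha}{w_i}\left(\boldsymbol{\delta}_i^{T}\boldsymbol{\delta}_i-\sigma_i^2\right)\end{aligned}\qquad(3)$$
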
where δ_i = x − m_i and α is a constant that limits the influence of old data. The ownership o_i is set to 1 if x matches the corresponding Gaussian PDF and to 0 otherwise. Here "match" means that the Mahalanobis distance from x to the Gaussian PDF is below some bound, for example three standard deviations. The weights are sorted in ascending order. If no Gaussian PDF matches, the Gaussian PDF with the smallest weight is replaced by a new one with w_i = α, m_i = x and σ_i² = σ0, the specified initial value.
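The initialization and update procedure described above can be sketched for a single pixel as follows. This is an illustrative sketch under several assumptions, not the authors' implementation:

* covariances are isotropic (Σ_i = σ_i²I, as in [2]), and σ0 is treated as the initial variance;
* when several components match, only the one with the largest weight receives o_i = 1, which is the choice made in [2];
* the weights are renormalized after a replacement;
* the variance floor `kMinVar` is a hypothetical safeguard that stops a static pixel from driving its variance to zero.

All names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-pixel mixture component (isotropic covariance var * I).
struct Component {
    double weight;             // w_i
    std::vector<double> mean;  // m_i
    double var;                // sigma_i^2
};

// Assumed lower bound on the variance; not part of the original method.
constexpr double kMinVar = 1e-3;

// First frame: component 0 takes the pixel value with weight 1; the others have
// zero weight and zero mean; every variance starts at the specified value var0.
std::vector<Component> initPixel(const std::vector<double>& x, std::size_t K, double var0) {
    assert(K >= 1 && var0 > 0.0);
    std::vector<Component> gmm(K, Component{0.0, std::vector<double>(x.size(), 0.0), var0});
    gmm[0].weight = 1.0;
    gmm[0].mean = x;
    return gmm;
}

// One update with a new pixel value x; returns true if an existing component matched.
bool updatePixel(std::vector<Component>& gmm, const std::vector<double>& x,
                 double alpha, double var0) {
    assert(!gmm.empty() && alpha > 0.0 && alpha < 1.0);
    // Matching: squared Mahalanobis distance below 3^2; prefer the largest weight.
    std::size_t matched = gmm.size();  // gmm.size() means "no match"
    for (std::size_t i = 0; i < gmm.size(); ++i) {
        if (gmm[i].weight <= 0.0) continue;  // unused component
        double d2 = 0.0;
        for (std::size_t k = 0; k < x.size(); ++k) {
            const double diff = x[k] - gmm[i].mean[k];
            d2 += diff * diff;
        }
        if (d2 < 9.0 * gmm[i].var &&
            (matched == gmm.size() || gmm[i].weight > gmm[matched].weight))
            matched = i;
    }
    for (std::size_t i = 0; i < gmm.size(); ++i) {
        Component& c = gmm[i];
        const double o = (i == matched) ? 1.0 : 0.0;  // ownership o_i
        c.weight += alpha * (o - c.weight);
        if (i != matched) continue;
        const double k = alpha / c.weight;  // uses the updated weight, so k <= 1
        double dd = 0.0;                    // delta^T delta, with delta = x - old mean
        for (std::size_t j = 0; j < x.size(); ++j) {
            const double delta = x[j] - c.mean[j];
            dd += delta * delta;
            c.mean[j] += k * delta;
        }
        c.var = std::max(kMinVar, c.var + k * (dd - c.var));
    }
    if (matched == gmm.size()) {
        // No match: replace the component with the smallest weight, then renormalize.
        auto weakest = std::min_element(gmm.begin(), gmm.end(),
            [](const Component& a, const Component& b) { return a.weight < b.weight; });
        weakest->weight = alpha;
        weakest->mean = x;
        weakest->var = var0;
        double sum = 0.0;
        for (const Component& c : gmm) sum += c.weight;
        for (Component& c : gmm) c.weight /= sum;
    }
    return matched != gmm.size();
}
```

A caller would run `initPixel` once per pixel on the first frame and `updatePixel` on every later frame. The step α/w_i uses the weight after its own update. That weight is at least α, so the step never exceeds 1.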

The Bayesian decision rule[3] is used to determine whether a pixel belongs to the background or the foreground. Let FG and BG denote the foreground and the background, respectively. The Bayesian formulas are then

$$P(BG\mid\mathbf{x})=\frac{p(\mathbf{x}\mid BG)\,P(BG)}{p(\mathbf{x})}\qquad(4)$$

$$P(FG\mid\mathbf{x})=\frac{p(\mathbf{x}\mid FG)\,P(FG)}{p(\mathbf{x})}\qquad(5)$$

A pixel is assigned to the background when P(BG|x) > P(FG|x). Assume that P(FG) = P(BG) and that the foreground follows a uniform distribution, that is, p(x|FG) = c (c is a constant, 0 < c < 1). The common factors then cancel: if p(x|BG) > c, the pixel is considered background; otherwise, it is considered foreground. An example of moving target detection based on GMM is shown in Fig.1.

Fig.1 An example of moving target detection based on GMM
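The Bayesian decision rule in Section 2.1 reduces to a single comparison. The sketch below keeps the full posterior so that the reduction is visible. With P(BG) = P(FG) = 0.5 and p(x|FG) = c, the test P(BG|x) > 0.5 is equivalent to p(x|BG) > c. The argument `likeBG` would be the background GMM density of the pixel. Both function names are hypothetical.

```cpp
#include <cassert>

// Posterior P(BG|x) by Bayes' rule, with p(x) = p(x|BG)P(BG) + p(x|FG)P(FG).
double posteriorBackground(double likeBG, double likeFG, double priorBG) {
    assert(priorBG > 0.0 && priorBG < 1.0);
    const double num = likeBG * priorBG;
    const double den = num + likeFG * (1.0 - priorBG);
    return den > 0.0 ? num / den : 0.0;
}

// Equal priors and a uniform foreground density p(x|FG) = c:
// P(BG|x) > P(FG|x) then holds exactly when p(x|BG) > c.
bool isBackground(double likeBG, double c) {
    assert(c > 0.0 && c < 1.0);
    return posteriorBackground(likeBG, c, 0.5) > 0.5;
}
```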

2.2 Improvement for Moving Target Detection

The traditional Gaussian mixture model is built from pixel values in RGB color space. However, illumination variation often degrades the performance of foreground extraction in that space. The model is therefore improved here: RGB is converted into YCbCr color space, and only the Cb and Cr values are used to construct the Gaussian mixture model. A comparison experiment was also conducted to verify the effectiveness of this improvement, and its results are given in Section 5.
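Section 2.2 does not state which RGB-to-YCbCr coefficients are used. The sketch below assumes the ITU-R BT.601 weights with a chroma offset of 128 for 8-bit data. OpenCV's `cv::cvtColor` with `cv::COLOR_BGR2YCrCb` computes the same quantities, but stores the channels in Y, Cr, Cb order. `rgbToCbCr` and `Chroma` are hypothetical names. Cb and Cr still scale with overall intensity, so discarding Y reduces the sensitivity to lighting but does not remove it.

```cpp
#include <algorithm>
#include <cassert>

struct Chroma {
    double cb;
    double cr;
};

// BT.601 luminance weights with a chroma offset of 128 for 8-bit data.
// Results are clamped to [0, 255], as an 8-bit image would store them.
Chroma rgbToCbCr(double r, double g, double b) {
    assert(r >= 0 && r <= 255 && g >= 0 && g <= 255 && b >= 0 && b <= 255);
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;  // luminance, discarded by the method
    const double cr = (r - y) * 0.713 + 128.0;
    const double cb = (b - y) * 0.564 + 128.0;
    return {std::clamp(cb, 0.0, 255.0), std::clamp(cr, 0.0, 255.0)};
}
```

The pair (Cb, Cr) returned here would be the two-dimensional x fed to the per-pixel GMM.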

3 Skin Segmentation

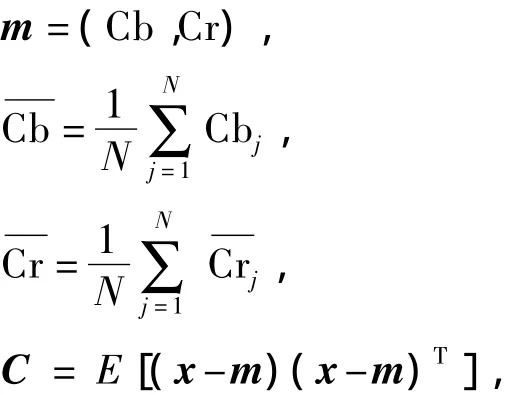

As the distribution of skin color pixels in Cr/Cb subspaces is similar to the Gaussian distribution[4]illustrated in Fig.2[5],for each pixel,the probability of the pixel locating in the skin area can be calculated as

P(x)=exp[-0.5(x-m)TC-1(x-m)](6)where x is the vector of pixels composed of Cb and Cr,m is the vector of the means of Cb and Cr,

Fig.2 Distribution of skin color in Cr/Cb subspaces[5]

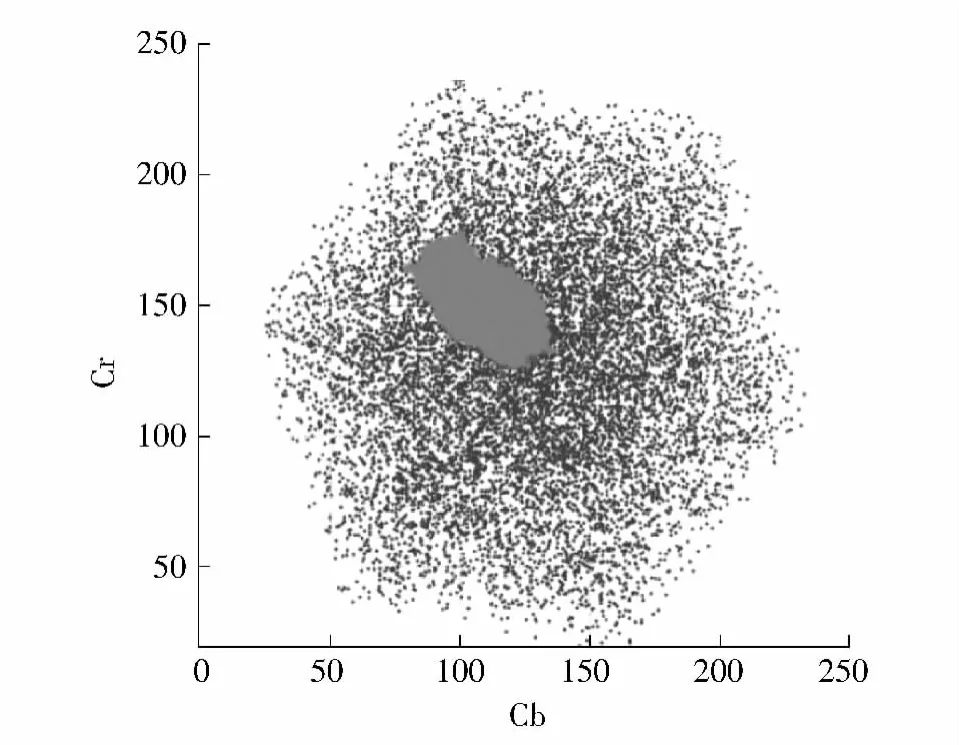

and N is the number of the pixels in the image.The probability of each pixel is calculated with Eq.(3)and is compared with a specified threshold.If the former is greater than the latter,the pixel can be considered as a part of the skin,as shown in Fig.3.

Fig.3 An example of skin segmentation
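Eq.(6) and its threshold test can be sketched as follows for the two-dimensional (Cb, Cr) case, where the inverse of the 2×2 covariance matrix has a closed form. The caller chooses which pixels the mean and covariance are estimated from, and also the threshold value. The covariance uses the 1/N normalization. `SkinModel`, `fitSkinModel`, `skinLikelihood` and `isSkin` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec2 = std::array<double, 2>;  // (Cb, Cr)

// Mean vector m and symmetric 2x2 covariance C = [[c00, c01], [c01, c11]].
struct SkinModel {
    Vec2 m;
    double c00, c01, c11;
};

// Estimate m and C (1/N normalization) from N Cb/Cr samples.
SkinModel fitSkinModel(const std::vector<Vec2>& samples) {
    assert(!samples.empty());
    const double n = static_cast<double>(samples.size());
    SkinModel s{{0.0, 0.0}, 0.0, 0.0, 0.0};
    for (const Vec2& x : samples) {
        s.m[0] += x[0] / n;
        s.m[1] += x[1] / n;
    }
    for (const Vec2& x : samples) {
        const double a = x[0] - s.m[0], b = x[1] - s.m[1];
        s.c00 += a * a / n;
        s.c01 += a * b / n;
        s.c11 += b * b / n;
    }
    return s;
}

// P(x) = exp(-0.5 (x-m)^T C^{-1} (x-m)), using the closed-form 2x2 inverse
// C^{-1} = [[c11, -c01], [-c01, c00]] / det(C).
double skinLikelihood(const SkinModel& s, const Vec2& x) {
    const double det = s.c00 * s.c11 - s.c01 * s.c01;
    assert(det > 0.0);  // C must be invertible
    const double a = x[0] - s.m[0], b = x[1] - s.m[1];
    const double q = (s.c11 * a * a - 2.0 * s.c01 * a * b + s.c00 * b * b) / det;
    return std::exp(-0.5 * q);
}

// A pixel is labeled skin when its likelihood exceeds the chosen threshold.
bool isSkin(const SkinModel& s, const Vec2& x, double threshold) {
    return skinLikelihood(s, x) > threshold;
}
```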

4 Edge Detection

4.1 Calculation of the Intensity Gradient of Image

Because the edges in an image may point in a variety of directions, the Canny algorithm[6-10] uses four filters to detect horizontal, vertical and diagonal edges in the blurred image. The edge gradient magnitude G and the direction angle θ are determined from the first derivatives in the horizontal direction (Gx) and the vertical direction (Gy):

$$G=\sqrt{G_x^{2}+G_y^{2}},\qquad\theta=\arctan\left(\frac{G_y}{G_x}\right)\qquad(7)$$

The edge direction angle θ is then rounded to one of four angles, representing vertical, horizontal and the two diagonals.
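The gradient computation can be sketched as follows. The text does not name the derivative filter, so this sketch assumes the 3×3 Sobel kernels, a common choice in Canny implementations. It also assumes that the input has already been blurred. The image is a row-major grayscale array whose rows grow downward. θ is computed with `atan2` to cover all quadrants. `Gradient` and `sobelGradient` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Gradient {
    double magnitude;  // G
    int direction;     // theta rounded to 0, 45, 90 or 135 degrees
};

// Sobel estimates of Gx and Gy at interior pixel (r, c) of a row-major grayscale
// image (rows grow downward), followed by the magnitude and quantized direction.
Gradient sobelGradient(const std::vector<double>& img, int width, int height, int r, int c) {
    assert(img.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    assert(r > 0 && c > 0 && r < height - 1 && c < width - 1);
    auto at = [&](int rr, int cc) {
        return img[static_cast<std::size_t>(rr) * static_cast<std::size_t>(width) +
                   static_cast<std::size_t>(cc)];
    };
    const double gx = (at(r - 1, c + 1) + 2.0 * at(r, c + 1) + at(r + 1, c + 1))
                    - (at(r - 1, c - 1) + 2.0 * at(r, c - 1) + at(r + 1, c - 1));
    const double gy = (at(r + 1, c - 1) + 2.0 * at(r + 1, c) + at(r + 1, c + 1))
                    - (at(r - 1, c - 1) + 2.0 * at(r - 1, c) + at(r - 1, c + 1));
    const double pi = std::acos(-1.0);
    double theta = std::atan2(gy, gx) * 180.0 / pi;  // in (-180, 180]
    if (theta < 0.0) theta += 180.0;                 // a direction and its opposite coincide
    const int dir = static_cast<int>((std::lround(theta / 45.0) * 45) % 180);
    return {std::hypot(gx, gy), dir};
}
```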

4.2 Non-Maximum Suppression

A large gradient magnitude alone is not enough to decide whether the corresponding pixel is part of an edge. An edge pixel should also be a local maximum along the gradient direction, as shown in Fig.4.

The local maximum should be sought along the gradient direction (the oblique line in Fig.4). Besides C, the points dTmp1 and dTmp2 on this line may also be local maxima. If the magnitude of C is smaller than that of dTmp1 or dTmp2, C is not part of the edge.

Fig.4 Schematic diagram of non-maximum suppression
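A simplified sketch of non-maximum suppression follows. It stands in the two neighboring pixels along the quantized gradient direction for dTmp1 and dTmp2. Directions are 0, 45, 90 or 135 degrees, with rows growing downward and θ taken from atan2(Gy, Gx). Ties are kept, so a plateau of equal magnitudes survives suppression. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Keep a pixel only if its magnitude is not smaller than its two neighbors along
// the quantized gradient direction. mag and dir are row-major width*height arrays;
// dir holds 0, 45, 90 or 135 (degrees). Border pixels are set to 0.
std::vector<double> nonMaxSuppression(const std::vector<double>& mag, const std::vector<int>& dir,
                                      int width, int height) {
    assert(mag.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    assert(dir.size() == mag.size());
    const std::size_t w = static_cast<std::size_t>(width);
    std::vector<double> out(mag.size(), 0.0);
    for (int r = 1; r < height - 1; ++r) {
        for (int c = 1; c < width - 1; ++c) {
            const std::size_t i = static_cast<std::size_t>(r) * w + static_cast<std::size_t>(c);
            int dr = 0, dc = 0;
            switch (dir[i]) {
                case 0:   dr = 0; dc = 1;  break;  // gradient along x: left/right
                case 45:  dr = 1; dc = 1;  break;  // down-right / up-left
                case 90:  dr = 1; dc = 0;  break;  // below / above
                case 135: dr = 1; dc = -1; break;  // down-left / up-right
                default:  continue;                // unknown direction: suppress
            }
            const double n1 = mag[static_cast<std::size_t>(r + dr) * w + static_cast<std::size_t>(c + dc)];
            const double n2 = mag[static_cast<std::size_t>(r - dr) * w + static_cast<std::size_t>(c - dc)];
            if (mag[i] >= n1 && mag[i] >= n2) out[i] = mag[i];
        }
    }
    return out;
}
```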

4.3 Tracing of Edges Through Image and Hysteresis Thresholding

Thresholding with hysteresis[10] requires two thresholds, a high one and a low one. Important edges are assumed to lie along continuous curves in the image. This assumption makes it possible to follow a faint section of a given line while discarding a few noisy pixels that produce large gradients but do not form a line. Applying the high threshold first marks out edges that are very likely to be genuine. Starting from these edges, and using the directional information derived earlier, edges can then be traced through the image. While an edge is being traced, the low threshold is applied, so that faint sections of the edge can be followed once a starting point has been found.
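Hysteresis tracing can be sketched as a breadth-first flood fill that starts from the strong pixels. As a simplification, this sketch does not follow the directional information mentioned above. Instead, it extends an edge into any of the 8 neighbors whose magnitude reaches the low threshold. The input is assumed to be the output of non-maximum suppression. The function name and the threshold values are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

// Returns a row-major mask with 1 for edge pixels. Pixels with mag >= high seed the
// edges; edges then grow into 8-connected neighbors with mag >= low.
std::vector<unsigned char> hysteresis(const std::vector<double>& mag, int width, int height,
                                      double low, double high) {
    assert(low <= high);
    assert(mag.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    const std::size_t w = static_cast<std::size_t>(width);
    std::vector<unsigned char> edge(mag.size(), 0);
    std::queue<std::size_t> frontier;
    for (std::size_t i = 0; i < mag.size(); ++i) {
        if (mag[i] >= high) { edge[i] = 1; frontier.push(i); }  // strong pixels
    }
    while (!frontier.empty()) {
        const std::size_t i = frontier.front();
        frontier.pop();
        const int r = static_cast<int>(i / w), c = static_cast<int>(i % w);
        for (int dr = -1; dr <= 1; ++dr) {
            for (int dc = -1; dc <= 1; ++dc) {
                const int rr = r + dr, cc = c + dc;
                if (rr < 0 || cc < 0 || rr >= height || cc >= width) continue;
                const std::size_t j = static_cast<std::size_t>(rr) * w + static_cast<std::size_t>(cc);
                if (!edge[j] && mag[j] >= low) { edge[j] = 1; frontier.push(j); }  // weak but connected
            }
        }
    }
    return edge;
}
```

Weak pixels that are not connected to any strong pixel, like the last one in a row such as {9, 4, 4, 0, 4} with thresholds 3 and 8, are discarded.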

5 Experiments and Discussion

5.1 Comparison Experiment

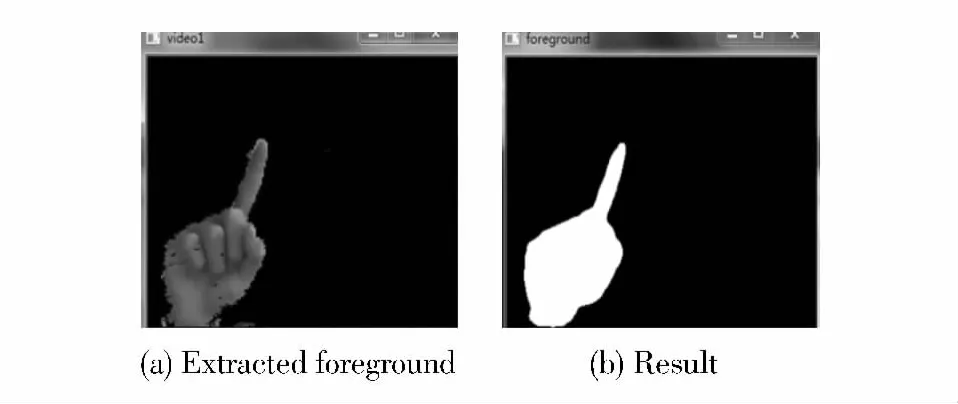

A comparison experiment was conducted to verify the robustness of the proposed method to illumination variation, especially significant variation. Because of limitations in the experimental equipment, illumination variation in the scene was simulated by moving the user's hand close to the camera.

Fig.5 Comparison of foreground extraction in RGB and YCbCr color spaces

Foreground extraction under significant illumination variation was first carried out in RGB color space, and the results are shown in Fig.5(a). For comparison, foreground extraction in YCbCr color space was carried out under the same conditions, and the results are shown in Fig.5(b). Under significant illumination variation, the whole scene is detected and extracted as foreground in Fig.5(a), whereas the foreground extraction result in Fig.5(b) is only slightly affected.

When a user browses photos, his or her hand usually moves close in front of the camera. This often causes illumination variation on the hand and degrades the accuracy of the system, which is why YCbCr color space is used in this photo-browsing system. The comparison results above show that the proposed method is more robust to illumination variation, which greatly benefits the subsequent processing steps in this system.

5.2 Function Test

A function test was carried out by running the photo-browsing system in practice. Fig.6(a) shows that the moving target starts near the center of the image and is marked by a circle. When the target moves near the left border of the image, the circle still marks it correctly, as shown in Fig.6(b). Based on these results, the accuracy of target localization meets the requirements of the system.

Fig.6 An example of function test for the location of moving hand

6 Conclusions

Based on the proposed method and the corresponding experimental results, the proposed system is robust to significant illumination variation. In our previous development work, significant illumination variation usually caused the system to fail. The system will be further improved in future work.

[1]Gary Bradski,Adrian Kaehler.Learning OpenCV:computer vision with the OpenCV library[M].California:O'Reilly Media,Inc,2008:1-14.

[2]Zoran Zivkovic.Improved adaptive Gaussian mixture model for background subtraction[C]∥Proceedings of the 17th International Conference on Pattern Recognition.Washington D C:IEEE Computer Society,2004:28-31.

[3]Richard O Duda,Peter E Hart,David G Stork.Pattern classification[M].Beijing:Publishing House of Electronics Industry,2003:16-18.

[4]Kong Wanzeng,Zhu Shan'an.A new method of single color face detection based on skin model and Gaussian distribution[C]∥Proceedings of the 6th World Congress on Intelligent Control and Automation.Dalian:IEEE,2006:261-265.

[5]Rein-Lien Hsu,Mohamed Abdel-Mottaleb,Anil K Jain.Face detection in color images[J].IEEE Transactions on Pattern Analysis and Machine Intelligence,2002,24(5):696-706.

[6]Rafael C Gonzalez,Richard E Woods.Digital image processing[M].3rd ed.Beijing:Publishing House of Electronics Industry,2010:741-747.

[7]Ziou D,Tabbone S.Edge detection techniques:an overview[J].International Journal of Pattern Recognition and Image Analysis,1998,8(4):537-559.

[8]Canny J.A computational approach to edge detection[J].IEEE Transactions on Pattern Analysis and Machine Intelligence,1986,8(6):679-698.

[9]Lindeberg Tony.Edge detection and ridge detection with automatic scale selection[C]∥Proceedings of the 1996 Conference on Computer Vision and Pattern Recognition.Washington D C:IEEE,1996:465-470.

[10]Ding Lijun,Goshtasby Ardeshir.On the Canny edge detector[J].Pattern Recognition,2001,34:721-725.