A Novel Ensemble Backtracking Search Algorithm for Numerical Optimization

ZHANG Yan,ZOU Feng,CHEN Debao

(School of Physics and Electronic Information,Huaibei Normal University, 235000,Huaibei,Anhui,China)

Abstract:To deal with the single evolution mode of the backtracking search algorithm(BSA),a novel ensemble backtracking search algorithm(EBSA)is proposed by integrating multiple mutation learning strategies into the BSA by an adaptive selection mechanism.In EBSA,four new mutation learning strategies are designed by using global and local information on the basis of the original mutation learning strategy.During the evolution processes of EBSA,the selection probability of the mutation learning strategy is calculated based on the changes of individual fitness values,and then the mutation learning strategies are determined by roulette wheel selection.To evaluate the performance of EBSA,it is compared with BSA and its variants on CEC2014 test suit.The simulation results indicated EBSA has good optimization performance compared with other algorithms.

Key words:ensemble learning strategy;backtracking search algorithm;roulette wheel selection;CEC2014 test suite

0 Introduction

Backtracking search algorithm (BSA) is a population- based heuristic algorithm proposed by Civicioglu[1],and it is widely used in optimization problems[2-3].However,the original BSA also has some drawbacks during evolution.First of all,the learning ability of individuals in the population is weak,and the historical information and current population are used to update the location of all individuals,so the best information cannot be fully utilized.In addition,if the diversity of population disappears during evolution,the population is easy to fall into local optimum.In order to maintain population diversity,the learning ability of operators also needs to be improved.Therefore,researchers have conducted many studies to improve BSA.

To balance the exploitation capability and search capability of BSA,Nama et al.[4]proposed a quantum mutation-based backtracking search algorithm that modifies the BSA framework(gQR-BSA)to proficiently search the entire search space.In gQR-BSA,a quantum Gaussian mechanism is developed based on the optimal population information mechanism to improve the population distribution information.Wang et al.[5]proposed an adaptive backtracking search optimization algorithm and combined it with the improved Hook Jeeves pattern search for numerical global optimization.It is divided into two stages.BSA is used in the exploration phase,and the improved pattern search method is used in the development phase.In order to optimize the loading scheme in heat treatment of castings,and to obtain the best charging plan for each feasible candidate set,Zhou et al.[6]proposed an improved backtracking search algorithm(IBSA)for casting heat treatment charge plan problem.To improve the development and exploring capabilities of IBSA,the historical population update mechanism has been modified.The differential evaluation algorithm,greedy local search algorithm and the mutation and cross mixing strategy of the re-initialization operator are improved.Mishra et al[7].proposed an effective method for accurately estimating the behavior of nonlinear systems.This paper is devoted to the accurate identification of non-linear tank level system by BSA.Chatzipavlis et al.[8]designed a new backtracking search algorithm to optimize the neuro fuzzy neural network.The network is composed of multiple levels,which operate in turn and establish the input-output relationship based on the first-order fuzzy rules.Due to the uncertainty and strong non-linearity of the pump turbine governing system(PTGS),parameters are difficult to identify.In order to solve this problem,Zhou et al.[9]combined the original BSA with orthogonal initialization technology,chaotic local search operator,elastic boundary processing strategy and adaptive variable scale factor.Chen et al.[10]proposed learning backtracking search optimization algorithm and its application(LBSA).In this paper,the global optimal information is combined with the historical information to update the individual randomly,and the other individuals update by learning the optimal individual,the worst individual and the contemporary random individual,which makes the individual convergence faster.Zou et al.[11]proposed hybrid hierarchical backtracking search optimization algorithm and its application (HHBSA).In this paper,two- layer population hierarchy and random recombination strategy were added into BSA to improve population diversity.When the evolutionary population enters the stagnation stage,mutation strategy is used and adaptive control parameters are proposed to improve the learning ability of BSA.To improve BSA optimization performance,Chen et al.[12]proposed backtracking search optimization algorithm based on knowledge learning(KLBSA).In this paper,based on the global and local information of the current population,the control parameters are designed,and the search step size of individuals is adaptively adjusted to improve the exploration and development ability of the balance algorithm.

In recent years,ensemble learning has attracted the attention of many scholars[13-14].Ensemble is the combination of multiple learning strategies.Integrating multiple strategies together can learn from each other,foster strengths and avoid weaknesses,so that they can achieve unexpected results and accomplish learning tasks together in a more effective way.Based on this view,a novel ensemble backtracking search algorithm(EBSA)for numerical optimization is proposed in this paper which is composed of multiple mutation learning strategies and the probability of mutation learning strategies is based on individual fitness value and roulette wheel selection to improve the optimization preference of BSA.In addition,different individuals are added to achieve a relatively balanced effect on population diversity and convergence speed.

1 Original BSA method

Similar to most meta heuristics,BSA is a population-based heuristic algorithm[15].The process of BSA can be summarized into five steps:initialize population,selection-I,mutation,crossover and selection-II.

Step 1:Initialize the populationXand the historical populationY.The initialization of the two populations is as follows:

wherei=1,2,…,N,j=1,2,…,D,NandDare the population size and the dimension of the population.lowjand upjrepresent the upper and lower bounds of individuals andU(·) is a uniformly distributed random function.

Step 2:Selection-I.This step is the starting point of algorithm iteration.Firstly,judge whether the current population is updated in this iteration according to Eq.(3)and then sort it randomly by Eq.(4).This mechanism ensures that BSA can select a generation as the historical population randomly and redefine the new search direction.

wheretrepresents the current iteration number,m1andm2are two random numbers.The new historical population is selected from current population and historical population.

Step 3:Mutation.The search direction matrix(Y-X)uses the difference information between the current population and historical population,and the amplitude of the search direction matrix is controlled by parameterF.

whereFis the mutation control parameter,Fis set to 3*randn,randn is the random number of standard normal distribution.Hence,the mutation step is determined byFso as to achieve the mutation effect by learning from the current population and the historical population.

Step 4:Crossover.A complex non-uniform crossover strategy is designed.Firstly,the binary integer matrix map ofN*Dis generated,as shown in the Eq.(6).If thejth dimension value of theith individual is 1,thejth dimension value of theith individual of the population will be updated to the corresponding position of the test population,as shown in Eq.(7).

whereuis a random integer value series withDdifferent values among[1,D],canddare random numbers between(0,1),Ni,jrepresents theith individual,the iteration istand the dimension isj.

Step 5:Selection-Ⅱ.The selection-Ⅱof BSA selects individuals with small fitness value to enter the next iteration until the stop condition of the algorithm is met,where fitness(Ni,j),fitness(Xi,j)represent fitness values ofNi,jandXi,jin Eq.(8).

After updating the current population in selection-II,BSA returns to selection-I for the next iteration if the algorithm termination condition was not met.

2 Ensemble BSA method

Using different individual models and some integration strategies to improve the performance of the whole model has been proved to overcome this problem effectively[16].Ensemble learning is one of the relatively active topics in the machine learning field.Integrating multiple different mutation strategies can produce good performance.

Like the BSA,EBSA initializes a current population and a historical population containingN Ddimensional individuals and calculate the fitness value of current population individuals.Then selection-I,the historical population is updated and sorted randomly.Initialize parameters and probabilities before iteration,where control parametersF1=1.5*rand,F2=0.9*rand,probability:P1=0.2,P2=0.4,P3=0.6,P4=0.8.The global best individual is the best individual of the current population,and the global worst individual is the worst individual of the current population.Neighborhood optimal population and neighborhood worst population of each generation are composed of neighborhood optimal individuals and neighborhood worst individuals,where the neighborhood size of the individual is 5.The current population,neighborhood optimal population and neighborhood worst population rearrange every 200 generations to increase the diversity during evolution.Next is the mutation stage,in which individuals produce the next generation according to Eqs.(9~13).After mutation,record the number of times each mutation learning strategy was used and the number of fitness values improved after individuals use mutation learning strategies,so as to use roulette selection to generate selection probability.The probability boundary will be controlled and normalized.Finally,execute selection-II for the next iteration and the final test population is generated by the selection-II process in the crossover stage.

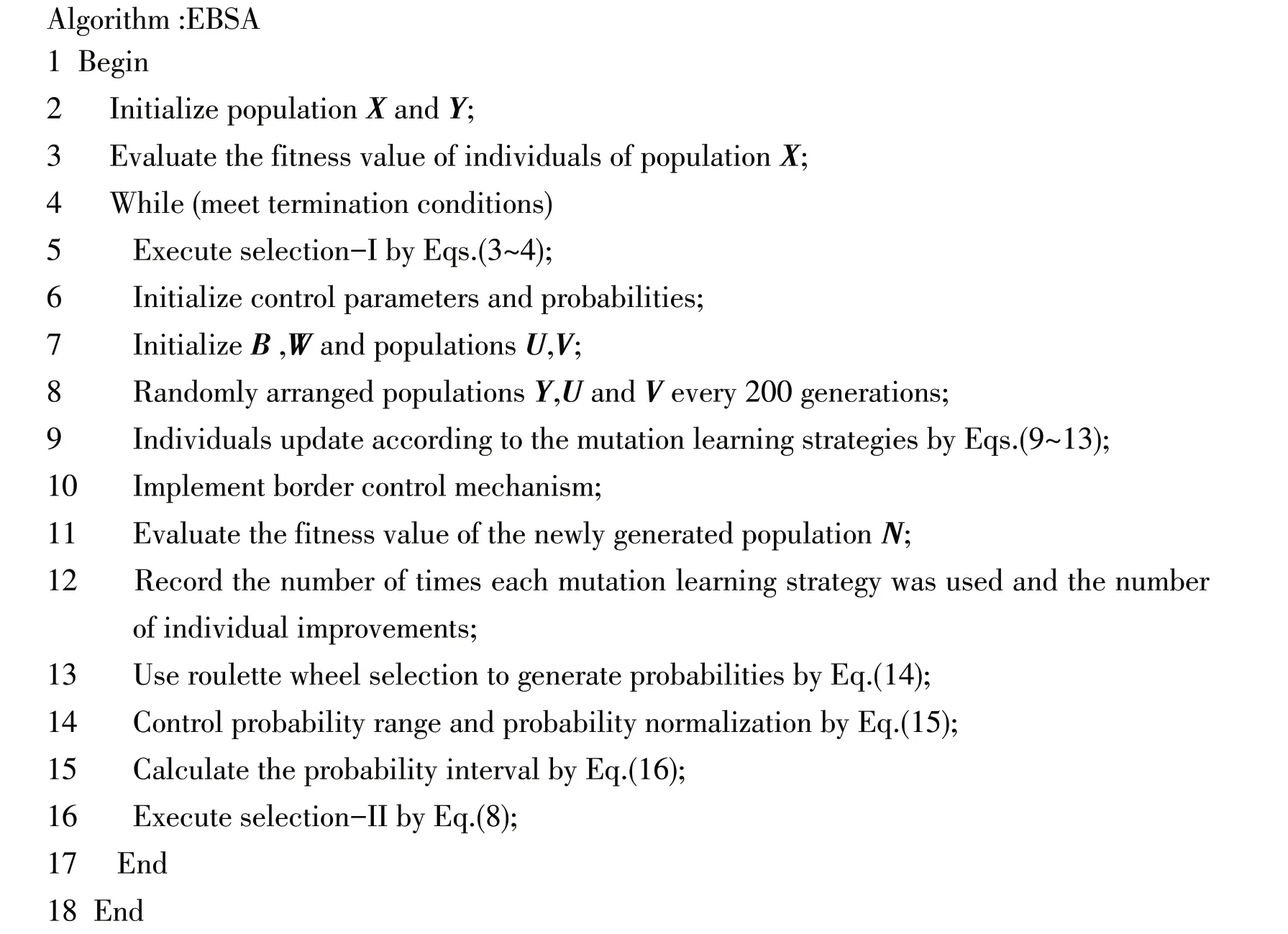

2.1 Pseudo-code of EBSA

The framework of the proposed EBSA is given in Algorithm:EBSA.

2.2 Mutation variants of BSA

Only current population information and mutation operators guide population renewal,with inadequate use of individual information and low population diversity.In order to change the deficiency of individual differences and the shortage of population convergence speed,the guidance of the neighborhood optimal individual,the neighborhood worst individual,the global optimal individual and the global worst individual are added into the basis of original individual renewal,which greatly improves the individual′s learning ability and population diversity.Five mutation learning strategies are described as follows:

1) Original mutation learning strategy:

wheretrepresents the current generation.In our experiment,the mutation control parameter is set as:F1=1.5*rand.

The lack of diversity in the evolutionary process can lead to local optima,thus making the optimization performance of the algorithm insufficient.The next two mutation learning strategies are more suitable for solving some problems with high complexity by learning from neighborhood individuals,which increases the convergence speed and improves the diversity problem at the same time.In our experiments,the mutation learning strategy of adding neighborhood optimal individuals plays a great role in solving multi-modal and hybrid functions,while the mutation learning strategy of adding neighborhood worst individuals is more effective in solving composition functions.

2)Mutation learning strategy with the neighborhood optimal individual:

whereUtis the local optimal individual from its neighbors in thetth iteration and another mutation control parameterF2is set as:F2= 0.9*rand.

3)Mutation learning strategy with the neighborhood worst individual:

whereVtis the local worst individual from its neighbors in thetth iteration andYt-Vtrepresents the current individual evolving in the opposite direction of the neighborhood worst individual.

Adding global individuals can improve the convergence accuracy.For uni- modal functions,the convergence speed is considered more than population diversity.Therefore,to improve the slow convergence speed and the lack of diversity of the original BSA,the global optimal individual and the global worst individual were added in the following two mutation learning strategies.The mutation learning strategy of adding the global optimal individual is effective in solving the uni-modal problems.In our experiments,the mutation learning strategy of adding the global worst individual can increase the diversity to some extent.

4)Mutation learning strategy with the global optimal individual:

whereBtrepresents the global optimal individual in thetth iteration.5)Mutation learning strategy with the global worst individual:

whereWtrepresents the global optimal individual in thetth iteration.Yt-Wtrepresents the current individual evolving in the opposite direction of the global worst individual.

In the experiment,five different mutation learning strategies are integrated into EBSA to guide the evolution of individuals effectively,thus the learning ability of individuals can be improved greatly.

2.3 Integrated selection mechanism

To overcome the shortcomings of the original BSA in terms of underutilization of information in the mutation stage,the ensemble method was extended to the BSA.In EBSA,five mutation learning strategies are recorded asA1,A2,A3,A4andA5.Here,the key to update individuals in each generation is how to select mutation learning strategies in a more effective way,which is the problem of the probability of strategy selection.To ensure fairness we set the initial probabilities ofA1,A2,A3,A4andA5to 0.2.Counters were used to record the number of times the mutation learning strategy was used and the number of individuals whose fitness value had been improved after using the mutation learning strategy.Probabilities were then generated using the roulette wheel selection.The selection probability is updated as following formula:

whereyrrepresents the number of individuals that they are updated according to the mutation learning strategy andxrrepresents the number of individuals that their current fitness value are better than their previous fitness value.

In order to prevent the proportion of mutation learning strategy from being too large or too small,the range of probability values is limited so that all five mutation learning strategies can be selected to update individuals.In addition,excellent learning strategies will also receive rewards,increasing the probability to 0.3.Therefore,it avoids the absolute dominance of one learning strategy throughout the evolutionary process and reduces the diversity of the population.In a word,the final selection probability follows the following rules:

whereris an integer within[1,5].Prrepresents the probability of selecting therth mutation learning strategy.

The probability generated by Eq.(14)is not the final probability and needs to be normalized.The following is the processing:

In subsequent iterations,individuals select the mutation learning strategy to update the population according to the probability interval generated by roulette wheel selection.

3 Simulation

3.1 Evaluation function and parameter setting

In this section,CEC2014 test suit is tested in order to verify the feasibility and optimize the performance of EBSA.To ensure fairness and effectiveness of EBSA,the comparison algorithm is BSA and its variants LBSA,HHBSA and KLBSA.All algorithms were performed under MATLABR2019b.For each algorithm,the population size is to set to 50,the evaluation times are 150 000 times,and it runs 30 times independently.

3.2 Comparison results of 30D in CEC2014

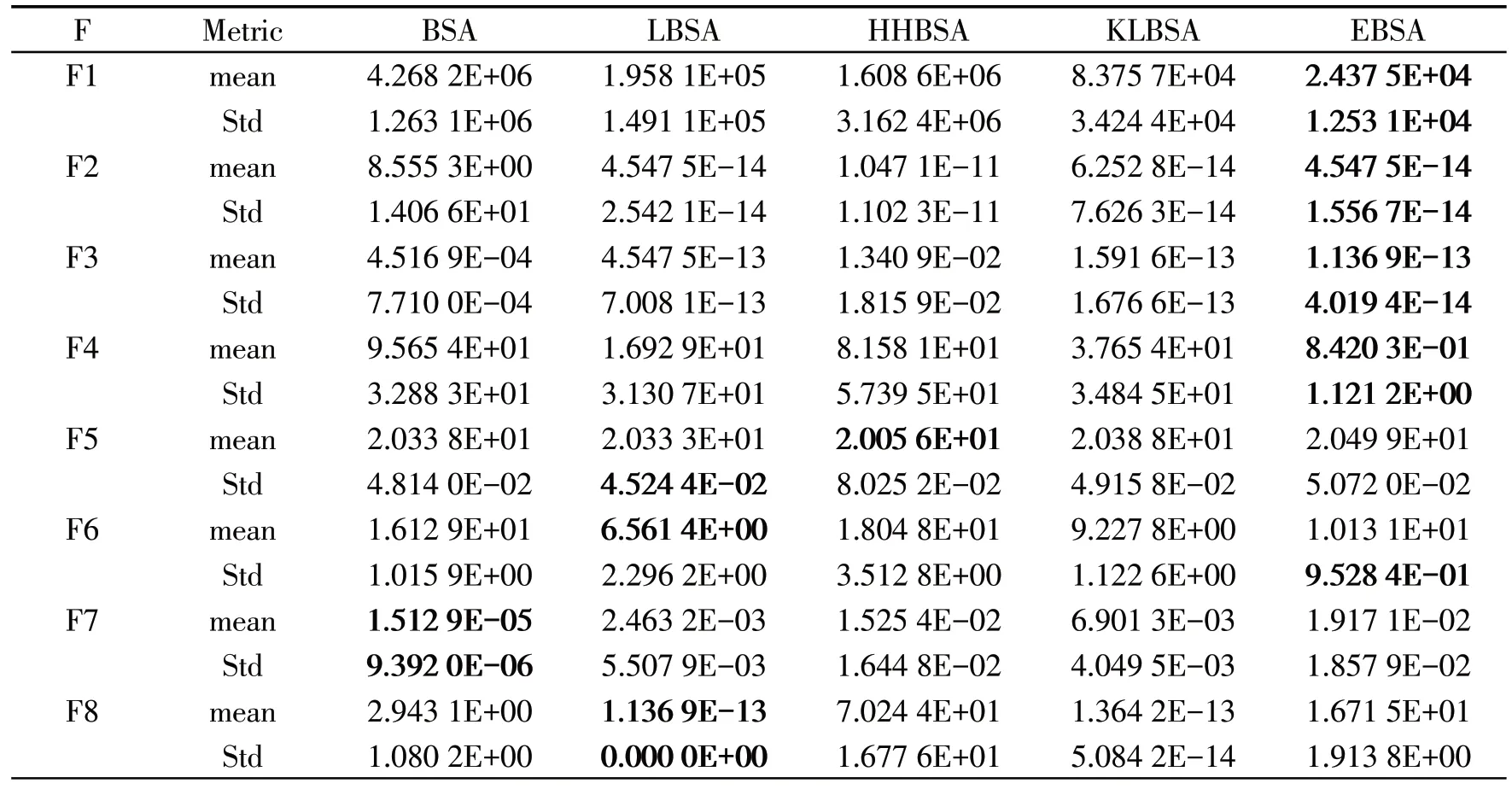

The test results for the CEC2014 test suit are shown in Table 1 and the best values in the table are bolded.

Table 1 30-D comparison results of EBSA and other 4 algorithms on CEC2014 test suit

Continued

From table 1,it can be observed that EBSA performed best and ranked first in 14 functions compared to BSA and its variants.EBSA performs best in all uni-modal functions(F1~F3).For multi-modal functions(F4~F16),EBSA has the best values in functions F4,and F14~F15,and BSA performs best in function F7 and F13.HHBSA performs best in function F5.For functions F6,F8~F9 and F10~F12,LBSA has the best value.KLBSA has the best values in functions F16.We see that EBSA performs well for all hybrid functions(F17~F22).For the composition functions(F23~F30),HHBSA has the best value in functions F23~F25 and BSA performs best in function F27.For function F28,LBSA has the best value and EBSA performs best in function F26 and F30.In terms of standard deviation(Std),we can see that EBSA performs well in 13 functions(F1~F4,F6,F12~F13 and F17~F22),BSA performs well in 7 functions(F7,F9,F11,F14,F16,F26,F27),and LBSA is better than other algorithms in 5 functions(F5,F8,F10,F23,F28).This means the stability of EBSA is perfect and the stability of LBSA and BSA is also good.

Fig.1 shows the convergence curves of these algorithms on the CEC2014 test suit.Corresponding convergence curves are selected from six different functional functions.Function F1 is selected for unimodal functions,function F4 is selected for simple multi-modal functions,functions F17 and F21 are selected for hybrid functions,and functions F26 and F30 are selected for composition functions.From the convergence curves of these 6 functions,it can be seen that the increase of diversity makes EBSA converge faster than other algorithms in the early stage.Besides,although the overall optimization of EBSA is superior to other 4 algorithms,the optimization of simple multi-modal functions is still insufficient,which is also the part to be improved in the future.Some convergence curves are shown as follows.

Fig.1 Convergence curves of 5 algorithms for 6 functions

4 Conclusion

The ensemble method is extended into BSA to improve its optimization performance.The guidance of global and local information is used to maintain the diversity of the population,the rate of convergence is accelerated,and the optimization performance of EBSA is improved.The selection probability of mutation learning strategy is determined by the change of individual fitness value and roulette wheel selection.The adaptive update of the selection probability of mutation learning strategies can guide the evolution of the population more effectively.The 30-Dsimulation experiment was conducted on the CEC2014 test suit.The results showed that EBSA had better optimization performance than other advanced algorithms.Although the optimization performance of EBSA has surpassed that of BSA and its variants,there is still room for improvement of other advanced algorithms.Future work will include further evaluating and evaluating EBSA on other test suites,analyzing its computational complexity,and extending it to different practical applications,such as optimizing artificial neural networks,image processing,and more complex industrial engineering problems.